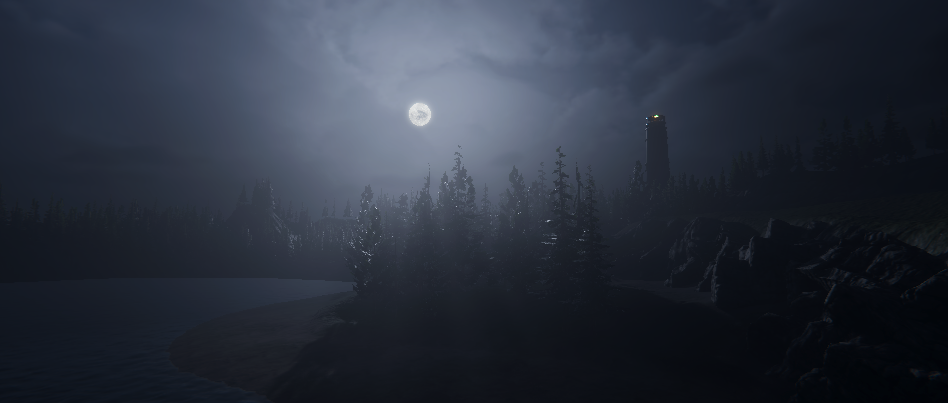

Objective

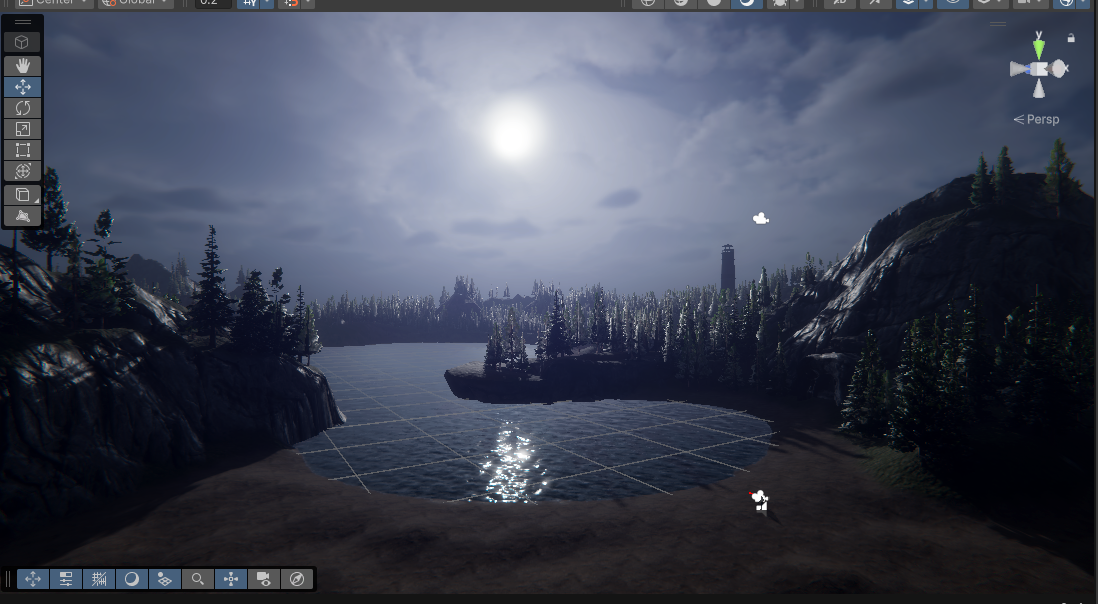

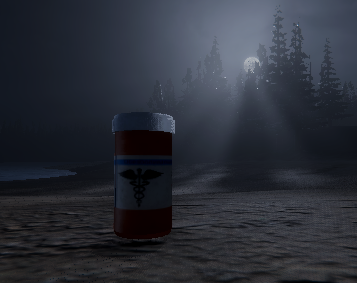

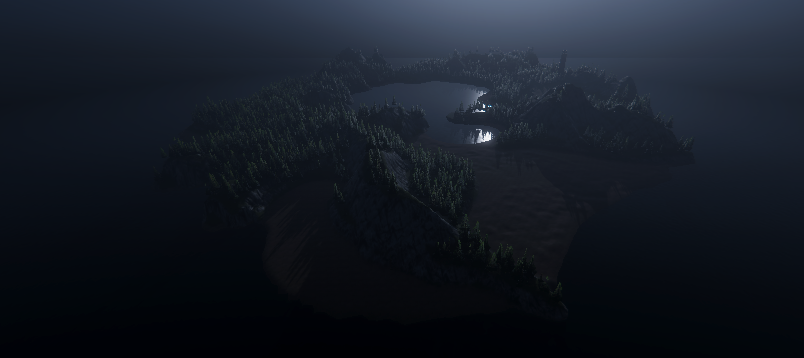

The primary objective of this project was to explore the intersection of psychological horror and virtual reality, moving away from traditional jump-scares to focus on sustained atmospheric dread. Developed as a solo project at the University of Salford, it aimed to create a highly immersive environment in which player agency is driven by tactile interactions and sensory feedback. By prioritising spatial audio and physical presence, the project set out to demonstrate how environmental storytelling can be used to manipulate player psychology and maintain tension within a 360-degree digital space.